Social media platforms are among the biggest online spaces where people share and express their opinions, whether by uploading content such as posts and pictures or by commenting on other people’s posts. Social media also makes it easy for people to stay informed about current events.

In the past, people stayed informed about what was happening in their surroundings or society through traditional media such as newspapers and radio. Now that social media’s reach is growing relative to traditional media, it is far easier for large audiences to keep up with events and share their personal views.

At first, people used social media mainly to connect with individuals from other regions, to share their opinions, or to show off their social lives a little. Today, businesses and organizations also use it to promote their brands and commercial activities and to attract and engage customers.

As discussed above, people can easily and freely upload almost anything to their social media or social networking accounts, where other users can readily see it. Taking advantage of this, many people misuse these platforms by uploading offensive content and sharing it with a vast audience.

This is where controlling offensive content comes in: it keeps such material away from regular users. Objectionable content can include indecent images and videos, abusive language, and violence, all of which can easily disturb users of every age group.

Moderation refers to monitoring and managing content on online platforms such as social networking websites. On social media it is called social media content moderation: content that is offensive or unsuitable for ordinary users is reviewed and, where necessary, removed.

In this process, user-generated content on platforms such as Twitter, Instagram, Facebook, and Tumblr that is offensive, obnoxious, or unsuitable for all age groups is moderated.

Content moderation is most needed on social media platforms, where the public is free to post almost anything they wish. Users can share their views, experiences, or feedback, and other users can easily see it.

Content moderation services, such as image and video moderation, make it possible to control this kind of content on online platforms. The content is closely monitored by experts known as content moderators (on social media, social media moderators), who check each item and decide whether to allow it or remove it. Outsourcing content moderation to a specialist provider can help your organization clear moderation bottlenecks at a lower cost while maintaining quality.

An AI-based content moderation system automatically reviews the content any user uploads. Anything it finds offensive or objectionable is sent for approval. When flagged content reaches a social media moderator, it is carefully reviewed: the moderator either makes it visible to every user or removes it from the user’s account and takes further action, such as issuing a warning or blocking the user.
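The flag-then-review flow described above can be sketched in Python. This is a minimal illustration under stated assumptions, not a production system: `toy_offensiveness_score` is a hypothetical keyword-based stand-in for a real machine-learning classifier, and the 0.5 threshold and the rule of blocking a user after more than two warnings are illustrative choices, not values from any particular platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Status(Enum):
    PUBLISHED = "published"
    PENDING_REVIEW = "pending_review"
    REMOVED = "removed"


@dataclass
class Account:
    name: str
    warnings: int = 0
    blocked: bool = False


@dataclass
class Post:
    author: Account
    text: str
    status: Status = Status.PENDING_REVIEW


def toy_offensiveness_score(text: str) -> float:
    """Hypothetical stand-in for an ML classifier: 0.5 per flagged term, capped at 1.0."""
    flagged_terms = {"idiot", "stupid"}  # illustrative list, not a real model
    words = [w.strip(".,!?").lower() for w in text.split()]
    hits = sum(w in flagged_terms for w in words)
    return min(1.0, 0.5 * hits)


def automated_review(
    post: Post,
    review_queue: List[Post],
    scorer: Callable[[str], float] = toy_offensiveness_score,
    threshold: float = 0.5,  # assumed cut-off
) -> None:
    """First pass: publish clean content, hold flagged content for a human."""
    if scorer(post.text) >= threshold:
        post.status = Status.PENDING_REVIEW
        review_queue.append(post)
    else:
        post.status = Status.PUBLISHED


def human_decision(post: Post, approve: bool, max_warnings: int = 2) -> None:
    """Second pass: a moderator approves or removes; repeat offenders get blocked."""
    if approve:
        post.status = Status.PUBLISHED
        return
    post.status = Status.REMOVED
    post.author.warnings += 1  # every removal counts as a warning
    if post.author.warnings > max_warnings:
        post.author.blocked = True
```

Keeping the scorer as a parameter reflects the division of labour in the text: the machine only decides what a human needs to look at, and the final publish-or-remove call stays with the moderator.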


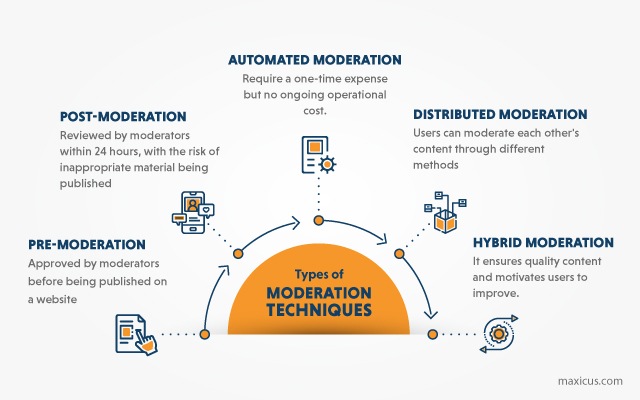

A moderation mechanism is a process in which a moderator reviews the posts users make on a website, checking whether they contain spam or obscene, insulting, illegal, or violence-inciting material of any kind. The moderator decides what type of content belongs on the website based on its intended audience, and may then delegate ongoing moderation to junior moderators so that trolling and spam do not slip through. There are four types of moderation:

In pre-moderation, all content is checked before it is published on the website, which gives greater control over what appears. After posting, users must wait until a social media moderator has vetted their content before it goes live. This is a drawback wherever real-time communication is required. Another disadvantage is the high cost when the volume of user-generated content is large.
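A minimal sketch of pre-moderation, assuming a single first-in, first-out review queue (the class and method names are hypothetical): submissions sit in `pending` and only reach `published` after a moderator approves them, which is exactly why authors have to wait.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Submission:
    author: str
    text: str


class PreModeratedBoard:
    """Pre-moderation: nothing becomes visible until a moderator approves it."""

    def __init__(self) -> None:
        self.pending: Deque[Submission] = deque()  # awaiting review, not public
        self.published: List[Submission] = []

    def submit(self, sub: Submission) -> None:
        self.pending.append(sub)  # the author must wait; nothing is shown yet

    def review_next(self, approve: bool) -> Submission:
        """Moderator takes the oldest pending item and approves or rejects it."""
        sub = self.pending.popleft()
        if approve:
            self.published.append(sub)
        return sub

    def visible(self) -> List[str]:
        return [s.text for s in self.published]
```

The cost problem mentioned above shows up directly in this structure: every single submission needs a `review_next` call by a person before anyone else can see it.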

In post-moderation, user-generated content is published in real time and moderated afterward, within the next 24 hours. Published items appear in a queue for the moderator to review. Because there is no initial screening, however, inappropriate material can stay visible until a moderator reaches it.
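Post-moderation inverts that order: content is live immediately and reviewed later. The sketch below is a hypothetical illustration. It keeps the 24-hour window from the text as a parameter and adds an `overdue` helper, an illustrative addition not described in the text, so a moderation team could see which live items have gone unreviewed for too long.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# The text describes review "within the next 24 hours"; kept as a parameter.
REVIEW_WINDOW = timedelta(hours=24)


@dataclass
class Item:
    text: str
    posted_at: datetime
    visible: bool = True    # post-moderation: live as soon as it is posted
    reviewed: bool = False


class PostModerationQueue:
    def __init__(self, window: timedelta = REVIEW_WINDOW) -> None:
        self.window = window
        self.items: List[Item] = []

    def publish(self, text: str, now: datetime) -> Item:
        item = Item(text, now)
        self.items.append(item)  # visible immediately AND queued for review
        return item

    def pending(self) -> List[Item]:
        return [i for i in self.items if not i.reviewed]

    def review(self, item: Item, approve: bool) -> None:
        item.reviewed = True
        item.visible = approve  # rejected items are taken down after the fact

    def overdue(self, now: datetime) -> List[Item]:
        """Unreviewed items that have been live longer than the review window."""
        return [i for i in self.pending() if now - i.posted_at > self.window]
```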

Automated moderation requires no human intervention. All user-generated content passes through tools such as word filters, which check it against a banned list of words or phrases and star out, modify, or remove any matches. Another tool is an IP ban list: content submitted from a banned IP address is deleted. This kind of social media content moderation involves a one-time setup expense but little ongoing operational cost.
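The two automated tools described above, a banned-word filter that stars out matches and an IP ban list, can be sketched with Python’s standard `re` module. The word list and IP address are placeholders (203.0.113.7 comes from a range reserved for documentation), and starring out all but the first letter is just one of the options the text mentions; a real system might replace or remove the word instead.

```python
import re
from typing import Optional

# Placeholder lists for illustration; a real platform would maintain its own.
BANNED_WORDS = {"darn", "heck"}
BANNED_IPS = {"203.0.113.7"}  # 203.0.113.0/24 is reserved for documentation

# \b word boundaries stop "heck" from matching inside words such as "checkup".
_BANNED_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(w) for w in sorted(BANNED_WORDS)) + r")\b",
    re.IGNORECASE,
)


def _star_out(match: "re.Match[str]") -> str:
    """Keep the first letter and replace the rest with asterisks."""
    word = match.group(0)
    return word[0] + "*" * (len(word) - 1)


def auto_moderate(text: str, sender_ip: str) -> Optional[str]:
    """Return the filtered post, or None if the sender's IP is banned."""
    if sender_ip in BANNED_IPS:
        return None  # the whole submission is dropped
    return _BANNED_PATTERN.sub(_star_out, text)
```

For example, `auto_moderate("Oh heck, that was close", "198.51.100.1")` returns `"Oh h***, that was close"`. Simple filters like this are cheap to run, but they miss misspellings and context, which is one reason platforms combine them with human review.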

In distributed moderation, users moderate one another’s content. It comes in two forms: user moderation and spontaneous (or reactive) moderation.

In user moderation, any user may moderate any other user’s content. This works where there is a large, active user base. Each user is allocated a number of mod points, which they spend to moderate other users’ content up or down by one point at a time. The points a post receives are aggregated into a score bounded within a range of -1 to 5 points. A display threshold is then set on this score, and only content scoring at or above that threshold is displayed.
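The mod-point scheme can be sketched as follows. All numbers here are illustrative assumptions: each user gets a fixed budget of mod points, every post starts at a baseline score of 1, the aggregate is clamped to a range of -1 to 5, and the display threshold is chosen by the caller. The class and method names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, List


class UserModeration:
    """Mod-point voting: each up/down vote moves a post's score by one point."""

    def __init__(self, points_per_user: int = 5, low: int = -1, high: int = 5,
                 baseline: int = 1) -> None:
        # All four defaults are illustrative assumptions.
        self.low, self.high, self.baseline = low, high, baseline
        self.points_left: DefaultDict[str, int] = defaultdict(lambda: points_per_user)
        self.net_votes: DefaultDict[str, int] = defaultdict(int)

    def moderate(self, moderator: str, post_id: str, up: bool) -> bool:
        """Spend one mod point; returns False if the moderator has none left."""
        if self.points_left[moderator] <= 0:
            return False
        self.points_left[moderator] -= 1
        self.net_votes[post_id] += 1 if up else -1
        return True

    def score(self, post_id: str) -> int:
        """Aggregate the votes, then clamp the result into [low, high]."""
        raw = self.baseline + self.net_votes[post_id]
        return max(self.low, min(self.high, raw))

    def visible(self, post_ids: Iterable[str], threshold: int) -> List[str]:
        """Only posts scoring at or above the threshold are displayed."""
        return [p for p in post_ids if self.score(p) >= threshold]
```

Limiting each user’s mod points is what keeps a single enthusiastic (or malicious) user from pushing a post to the top or bottom of the range alone.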

In spontaneous moderation, users moderate their peers’ comments spontaneously and at random. A related variation is meta-moderation, in which users judge the evaluations made by other users. It acts as a second layer of moderation that aims to improve fairness by letting users rate the ratings given to randomly selected posts.
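Meta-moderation can be sketched as sampling past moderation actions at random and asking meta-moderators whether each one was fair. In this hypothetical sketch, the `judge` callable stands in for a human meta-moderator’s verdict, and the output (the share of each moderator’s sampled actions judged fair) is one possible fairness signal, not a standard metric.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, Sequence


@dataclass(frozen=True)
class ModAction:
    moderator: str
    post_id: str
    up: bool


def meta_moderate(
    actions: Sequence[ModAction],
    judge: Callable[[ModAction], bool],
    sample_size: int,
    rng: random.Random,
) -> Dict[str, float]:
    """Judge a random sample of moderation actions; return each moderator's fair ratio."""
    sample = rng.sample(list(actions), k=min(sample_size, len(actions)))
    fair: DefaultDict[str, int] = defaultdict(int)
    total: DefaultDict[str, int] = defaultdict(int)
    for action in sample:
        total[action.moderator] += 1
        if judge(action):  # the meta-moderator's verdict: was this rating fair?
            fair[action.moderator] += 1
    return {m: fair[m] / total[m] for m in total}
```

Passing in a seeded `random.Random` keeps the sampling reproducible for testing, while the random selection itself is what spreads meta-moderation across moderators without anyone choosing whose ratings get checked.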

There is also a mixed approach called hybrid moderation, which combines the types discussed above. When a registered user posts content, it first goes through automated moderation. What happens next depends on the user’s posting history. If the user has consistently posted good content without any issues, post-moderation applies, since their post is very likely to be of adequate quality.

If the user is new, has no posting history, or has submitted poor posts in the past, pre-moderation applies: the post is moderated first and published only after approval. This motivates users to submit good-quality posts.

Finally, all posts are subject to meta-moderation. Every post therefore passes through three levels of moderation, which helps ensure good-quality content.
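The routing step at the heart of hybrid moderation can be sketched as a single function. Some details are assumptions and are labeled in the code: a user counts as trusted only after at least three past posts, all of which were accepted, and a post flagged by the automated stage is always held for pre-moderation (the text does not say what happens in that case). Meta-moderation is left out because it applies to every post regardless of the route chosen here.

```python
from enum import Enum
from typing import Sequence


class Route(Enum):
    PRE_MODERATION = "pre-moderation"    # held until a moderator approves it
    POST_MODERATION = "post-moderation"  # published now, reviewed afterwards


def route_post(passes_automated_check: bool, past_posts_ok: Sequence[bool],
               min_history: int = 3) -> Route:
    """Pick the second-stage moderation for a registered user's new post.

    past_posts_ok holds one entry per earlier post: True if it was accepted.
    min_history=3 is an assumed cut-off for "consistently good".
    """
    if not passes_automated_check:
        # Assumption: content flagged by the automated stage is always held.
        return Route.PRE_MODERATION
    trusted = len(past_posts_ok) >= min_history and all(past_posts_ok)
    return Route.POST_MODERATION if trusted else Route.PRE_MODERATION
```

New users, users with too little history, and users with any rejected post all fall through to pre-moderation, which matches the incentive described above: a clean record earns faster publishing.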

How can effective content moderation shape your customer experience?

Businesses today need to moderate the content on their social media pages, because these pages promote the business, its products, and its brands. Any objectionable content found there can easily hurt the business and disappoint its customers.

Social media is an excellent platform for any business or organization to expand its market and attract potential customers. Any comment or review a customer shares about a particular industry or brand can affect the brand’s image, positively or negatively. Comments and reviews therefore play a major role in building a company’s brand image.

When a comment or review is offensive in some way, social media moderation techniques can help the business considerably. Moderation filters out offensive comments, reviews, and posts before they are added to the website, which can help increase audience engagement and interaction with customers.

Moderating content helps showcase the brand’s authenticity, which in turn makes the brand feel safer and more trustworthy.

According to a report on global content moderation solutions by Data Bridge, the global content moderation solutions market is predicted to grow at a CAGR (compound annual growth rate) of 10.7% through 2026. (Source)
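For readers unfamiliar with the term, a compound annual growth rate $r$ means a quantity is multiplied by $(1 + r)$ each year, so a market of size $V_0$ reaches $V_n$ after $n$ years as shown below. The five-year horizon is purely illustrative and is not taken from the report: at 10.7% a year, five years of growth would multiply the market by roughly 1.66.

```latex
V_n = V_0\,(1 + r)^n
\qquad\Longrightarrow\qquad
\frac{V_5}{V_0} = (1 + 0.107)^5 \approx 1.66
```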

Social media content moderation acts as a filter that removes harmful content from posts and keeps the platform safe for its target audience. Unfiltered content can wreak havoc in many ways, which is why posts need to be moderated. Because social media plays a major role for businesses that rely on it for growth, maintaining adequate quality standards for posts is highly desirable.